
Why Trust Is Architecture, not a Feature

According to Hackett Group [1], 89% of executives want to advance AI, but AI models are getting more complex and harder to interpret. This creates a "black box" issue for FP&A teams. Gartner [2] predicts that over 40% of agentic AI projects will be cancelled by 2027 due to rising costs, unclear value, or poor risk controls. These risks can snowball, making it vital for FP&A teams to balance ROI with explainability and control.

Match Process and Technology

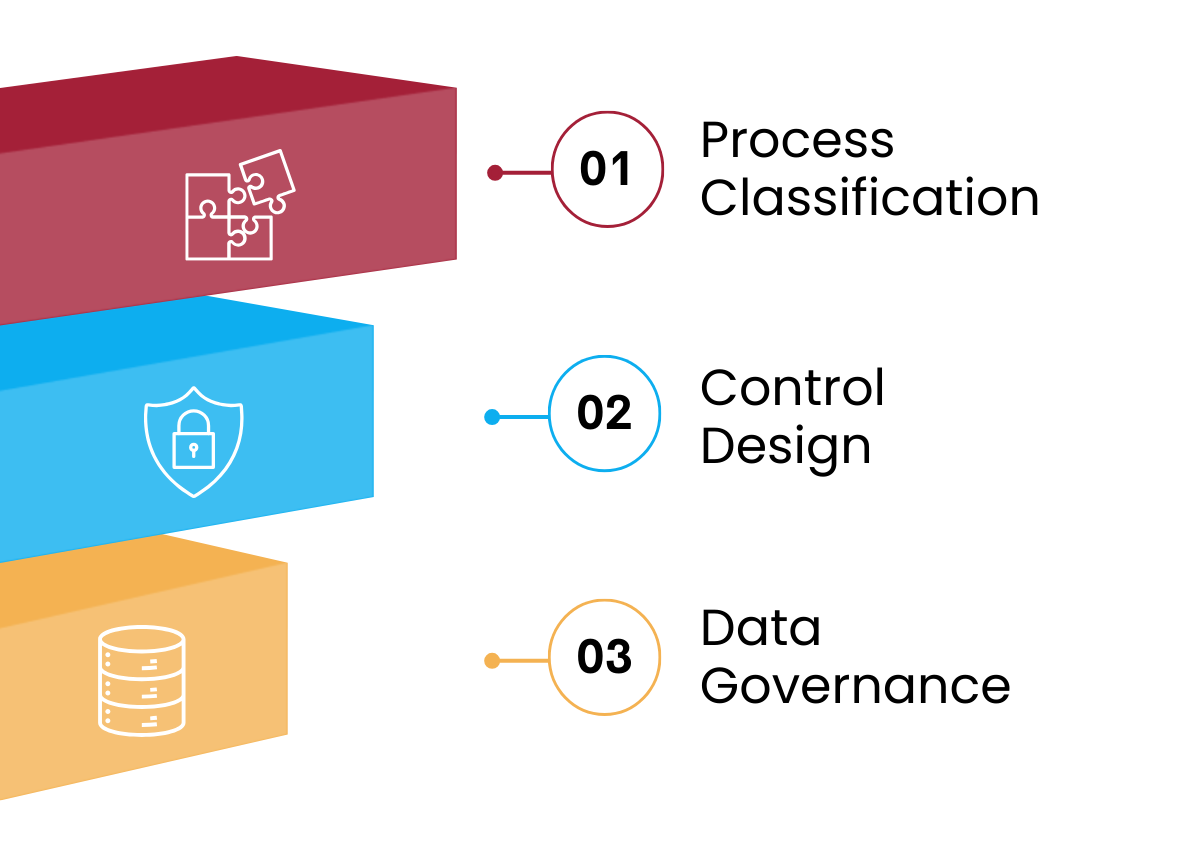

AI's probabilistic nature poses challenges for finance, where accuracy and consistency are essential. Finance AI is most effective when precision meets scale. Selecting processes suitable for AI transformation and pairing them with the right technologies helps avoid "black box" issues. As Boston Consulting Group [3] notes: "Using AI where it's about language, not math." This distinction is foundational. Finance operations typically fall into three categories, each needing a tailored tech approach:

Pattern Recognition and Synthesis (AI Suitable): Key characteristics are unstructured inputs, a need for pattern recognition, and probabilistic outcomes. Typical FP&A tasks include variance analysis, scenario planning, and identifying allocation keys for activity-based costing (ABC).

Lufthansa used McKinsey's Spendscape platform [4] to unify data from over 20 ERP systems, leveraging AI to spot spending patterns, pricing trends, and procurement inefficiencies. The system analyses invoices and purchase orders to detect anomalies, improve supplier negotiations, and track CO2 emissions for sustainability reporting. This approach enabled Lufthansa to optimise costs and gain clear emissions data, showcasing AI's ability to synthesise unstructured data into actionable insights.

Exact, Reproducible Outcomes (Traditional Automation): These processes demand deterministic calculations, reproducibility, and auditability, relying on rules-based logic. Traditional automation, such as Python, RPA or data analytics platforms, delivers superior results. When auditors review the close, they need to trace every number to source documents. AI's probabilistic nature introduces unnecessary risk here.

EY [5] improved its General Ledger posting process using Microsoft PowerPost, standardising journal entries with Power Apps forms, automating approval workflows, and leveraging SAP’s validation engine for auditability. Copilot assists, but rule-based logic and Dataverse ensure a secure, fully auditable trail, minimising AI-related risks to financial integrity.

Strategic Judgment (Human Decision-Making): These processes demand contextual understanding and accountability that cannot be delegated to algorithms. Examples include accounting policy decisions, impairments, and control design. AI can assist by analysing historical data and modelling scenarios, but the final judgment requires professional expertise that regulations assign to humans, so human review and accountability remain crucial.

A master's thesis from Imperial College London [6] demonstrates this principle in practice, developing a knowledge assistant to interpret IFRS sustainability standards using synthetic data and advanced AI. A fine-tuned model answered 93.5% of industry-specific questions correctly, while a document-backed system scored 85.3%. This shows AI can aid IFRS interpretation with traceable, authoritative answers.
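The three categories above can be expressed as a simple triage rule. The following is a minimal sketch, not a definitive framework: the `Task` attributes, the `classify` function, and the order in which the checks run are all illustrative assumptions rather than anything prescribed by the cited sources.

```python
from dataclasses import dataclass
from enum import Enum


class Approach(Enum):
    AI = "pattern recognition and synthesis (AI suitable)"
    AUTOMATION = "exact, reproducible outcomes (traditional automation)"
    HUMAN = "strategic judgment (human decision-making)"


@dataclass(frozen=True)
class Task:
    """Hypothetical task description used only for this illustration."""
    name: str
    needs_accountable_judgment: bool   # e.g. accounting policy, impairments
    needs_exact_reproducibility: bool  # e.g. postings auditors must trace
    has_unstructured_inputs: bool      # e.g. invoices, narrative commentary


def classify(task: Task) -> Approach:
    # Assumed precedence: accountability first, then reproducibility.
    if task.needs_accountable_judgment:
        return Approach.HUMAN
    if task.needs_exact_reproducibility:
        return Approach.AUTOMATION
    if task.has_unstructured_inputs:
        return Approach.AI
    # Conservative default (an assumption): prefer deterministic tooling.
    return Approach.AUTOMATION


variance_commentary = Task("variance commentary", False, False, True)
gl_posting = Task("general ledger posting", False, True, False)
impairment = Task("impairment decision", True, False, True)

for task in (variance_commentary, gl_posting, impairment):
    print(f"{task.name}: {classify(task).value}")
```

Checking accountability before anything else reflects the point that judgment assigned to humans by regulation stays with humans even when the inputs would otherwise suit AI.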

AI Control Design

FP&A can make a crucial contribution to the successful implementation of agentic AI by designing robust controls for autonomous systems that plan, execute, and adapt across multiple interactions. This requires understanding a fundamental shift in human-AI collaboration: human oversight points must be designed into the agent architecture, not added as afterthoughts.

Human oversight involves three key elements for each workflow. Approval gates identify which decisions need human sign-off based on their importance, reversibility, and compliance needs. Escalation triggers set conditions, like value thresholds or low confidence scores, that prompt agents to seek human input. Override protocols specify how humans can efficiently intervene when necessary, ensuring quick action without disrupting everyday agent tasks.
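As a rough illustration of how these three elements might fit together, the sketch below routes a proposed agent action to one of three outcomes. The class names, the threshold values, and the order of checks are hypothetical and would need to reflect each organisation's own policies.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    ESCALATE = "escalate_to_human"
    REQUIRE_APPROVAL = "require_human_approval"


@dataclass(frozen=True)
class Policy:
    value_threshold: float = 50_000.0  # hypothetical escalation limit
    min_confidence: float = 0.80       # hypothetical confidence floor


@dataclass(frozen=True)
class Action:
    description: str
    value: float
    confidence: float
    reversible: bool
    compliance_relevant: bool


def route(action: Action, policy: Policy,
          human_override: Optional[Route] = None) -> Route:
    # Override protocol: an explicit human decision always wins.
    if human_override is not None:
        return human_override
    # Approval gate: irreversible or compliance-relevant actions need sign-off.
    if not action.reversible or action.compliance_relevant:
        return Route.REQUIRE_APPROVAL
    # Escalation triggers: value above threshold or low confidence.
    if (action.value > policy.value_threshold
            or action.confidence < policy.min_confidence):
        return Route.ESCALATE
    return Route.AUTO_EXECUTE


policy = Policy()
accrual = Action("reclassify accrual", 1_200.0, 0.95, True, False)
large_accrual = Action("reclassify accrual", 80_000.0, 0.95, True, False)
payment = Action("release payment", 1_200.0, 0.99, False, False)

print(route(accrual, policy).value)
print(route(large_accrual, policy).value)
print(route(payment, policy).value)
```

Keeping the routing logic in plain rules like this, rather than inside the model, makes the approval logic something that can be explained to auditors.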

Deloitte [7] identifies two main operating models.

Human-in-the-loop: Humans control digital tools, including AI, initiate actions and make decisions using AI insights. This is common in finance, where analysts use AI for analysis but execute decisions themselves.

Human-on-the-loop: AI operates autonomously, with humans supervising and intervening as needed. Humans set parameters, monitor performance, and handle exceptions.

Effective AI governance requires two monitoring layers. Real-time alerts catch immediate failures, such as an agent attempting unauthorised data access or generating costs beyond thresholds. Trend analysis identifies gradual degradation, such as forecast accuracy declining over time or exception rates creeping upward.
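The two layers can be sketched in a few lines. This is an illustrative example only: the event fields, the cost limit, the window size, and the tolerance are assumptions, and a production setup would typically rely on a dedicated monitoring platform.

```python
from statistics import mean


def realtime_alerts(event: dict, cost_limit: float) -> list:
    """Layer 1: flag immediate failures in a single agent event."""
    alerts = []
    if event.get("unauthorised_access"):
        alerts.append("unauthorised data access attempt")
    if event.get("cost", 0.0) > cost_limit:
        alerts.append("cost above threshold")
    return alerts


def is_degrading(errors: list, window: int = 3, tolerance: float = 0.10) -> bool:
    """Layer 2: compare the mean forecast error of the latest `window`
    periods with the preceding `window` periods."""
    if len(errors) < 2 * window:
        return False  # not enough history to judge a trend
    earlier = mean(errors[-2 * window:-window])
    recent = mean(errors[-window:])
    return recent > earlier * (1 + tolerance)


event = {"agent": "forecast-agent", "cost": 120.0, "unauthorised_access": False}
print(realtime_alerts(event, cost_limit=100.0))

monthly_forecast_error = [0.05, 0.05, 0.05, 0.06, 0.07, 0.08]
print(is_degrading(monthly_forecast_error))
```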

Agentic AI for Activity-Based Costing integrates Machine Learning to analyse operational data and identify cost drivers that traditional models may overlook. AI learns cost drivers from historical data, reducing reliance on manual assumptions and enabling dynamic updates as conditions change. After AI identifies patterns, rules-based automation calculates cost allocations and keeps audit trails, with humans validating driver relevance. This approach ensures FP&A benefits from AI-driven insights without sacrificing control.
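The deterministic half of this workflow can be illustrated with a small allocation routine. The sketch assumes the cost driver and its volumes have already been proposed by a model and validated by a human; the function name, the record layout, and the rule for assigning the rounding remainder are hypothetical choices.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def allocate(cost_pool: Decimal, driver_volumes: dict) -> list:
    """Allocate a cost pool in proportion to driver volumes and
    return one audit record per cost object."""
    total = sum(driver_volumes.values(), Decimal("0"))
    if total <= 0:
        raise ValueError("driver volumes must sum to a positive number")
    amounts = {
        name: (cost_pool * volume / total).quantize(CENT, rounding=ROUND_HALF_UP)
        for name, volume in driver_volumes.items()
    }
    # Assumption: any rounding remainder goes to the largest recipient
    # so that the allocations tie out exactly to the cost pool.
    remainder = cost_pool - sum(amounts.values(), Decimal("0"))
    largest = max(amounts, key=amounts.get)
    amounts[largest] += remainder
    return [
        {
            "cost_object": name,
            "driver_volume": driver_volumes[name],
            "driver_total": total,
            "allocated": amounts[name],
        }
        for name in driver_volumes
    ]


records = allocate(
    Decimal("1000.00"),
    {"Product A": Decimal("1"), "Product B": Decimal("1"), "Product C": Decimal("1")},
)
for record in records:
    print(record["cost_object"], record["allocated"])
```

Using `Decimal` rather than floating point keeps the result reproducible to the cent, which is the property auditors need when tracing allocated amounts.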

Ground AI in Verified Data

To successfully implement AI in FP&A, robust data governance is crucial. KPMG's 2025 study [8] found that 62% of organisations see weak data governance as the main barrier to AI adoption. Managing data requires tracking its origin, usage, and control points, with separate sets dedicated to training, validation, testing, and production. For AI, data ownership also includes documenting data provenance and model details.
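One concrete check that follows from this is verifying that the data sets really are separate. The snippet below is a minimal sketch assuming each record carries a stable identifier; the split names and record ids are invented for illustration.

```python
from itertools import combinations


def overlapping_splits(splits: dict) -> dict:
    """Return the record ids shared by any pair of data sets."""
    overlaps = {}
    for (name_a, ids_a), (name_b, ids_b) in combinations(splits.items(), 2):
        shared = ids_a & ids_b
        if shared:
            overlaps[(name_a, name_b)] = shared
    return overlaps


splits = {
    "training": {"inv-001", "inv-002", "inv-003"},
    "validation": {"inv-004", "inv-005"},
    "testing": {"inv-003", "inv-006"},  # inv-003 leaks from training
    "production": {"inv-007"},
}

print(overlapping_splits(splits))
```

An empty result means the sets are disjoint; any entry points to records that should be traced back to their source and reassigned.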

A specific risk with AI is hallucination. Language models predict the next word based on probability rather than looking up precise answers. As a result, they can present outputs as fact or grounded inference even when those outputs are not supported by the system’s training data, input data, or verifiable external sources within the defined operating context. Results from the FailSafeQA benchmark [9] showed that even the best model invented information in 41% of cases when it lacked sufficient context.

Retrieval-Augmented Generation (RAG) reduces hallucination by having the system search a curated knowledge base first and then requiring the model to ground its answer in the retrieved sources and cite them. RAG is most effective for qualitative synthesis: interpreting policies, summarising trends, and analysing narrative disclosures. It struggles with numerical extraction and calculations, where deterministic tools remain superior [10].
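The retrieval-and-grounding step can be sketched without any model call. The example below is a toy: it uses simple keyword overlap where a real system would typically use embedding-based search, the knowledge base entries and document ids are invented, and the generation step is omitted entirely.

```python
import re
from typing import Optional

# Hypothetical curated knowledge base: document id -> passage.
KNOWLEDGE_BASE = {
    "policy-travel-01": "Travel expenses above the approved limit require controller approval.",
    "policy-capex-02": "Capital expenditure requests must include a payback calculation.",
}


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, min_overlap: int = 2) -> list:
    """Return (doc_id, passage) pairs sharing enough words with the question."""
    question_tokens = tokens(question)
    scored = [
        (len(question_tokens & tokens(text)), doc_id, text)
        for doc_id, text in KNOWLEDGE_BASE.items()
    ]
    return [
        (doc_id, text)
        for score, doc_id, text in sorted(scored, reverse=True)
        if score >= min_overlap
    ]


def build_prompt(question: str) -> Optional[str]:
    """Build a source-grounded prompt, or return None when nothing relevant
    is found so the caller can decline instead of letting the model guess."""
    hits = retrieve(question)
    if not hits:
        return None
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in hits)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"{sources}\nQuestion: {question}"
    )


print(build_prompt("Who must approve travel expenses?"))
print(build_prompt("What is the weather tomorrow?"))
```

Returning `None` when no source clears the threshold is the design choice that matters here: the absence of evidence is surfaced to the caller rather than papered over by generated text.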

Agentic AI reduces hallucinations by integrating rules-based automation with AI via orchestration systems that assign tasks to the most appropriate methods. Rules handle calculations, while FP&A teams use AI for analysis and pattern recognition. SQL agents convert natural language into data warehouse queries. This structure separates responsibilities so that code and queries yield numbers rather than language predictions.
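A minimal sketch of this separation of responsibilities is shown below, using Python's built-in `sqlite3` module as a stand-in for a data warehouse. The table, the request format, and the `handle` function are hypothetical, and the natural-language-to-SQL step is replaced by a fixed parameterised query for brevity.

```python
import sqlite3


def build_demo_warehouse() -> sqlite3.Connection:
    """Create an in-memory table standing in for the data warehouse."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE actuals (cost_centre TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO actuals VALUES (?, ?)",
        [("CC100", 1200.0), ("CC100", 800.0), ("CC200", 500.0)],
    )
    return conn


def total_for_cost_centre(conn: sqlite3.Connection, cost_centre: str) -> float:
    # The number comes from a query, not from a language prediction.
    row = conn.execute(
        "SELECT COALESCE(SUM(amount), 0) FROM actuals WHERE cost_centre = ?",
        (cost_centre,),
    ).fetchone()
    return float(row[0])


def handle(request: dict, conn: sqlite3.Connection) -> dict:
    """Route calculations to SQL; leave narrative work to a language model."""
    if request["kind"] == "calculation":
        value = total_for_cost_centre(conn, request["cost_centre"])
        return {"answer": value, "produced_by": "sql"}
    # Placeholder: a real orchestrator would call a language model here.
    return {"answer": None, "produced_by": "language_model"}


conn = build_demo_warehouse()
print(handle({"kind": "calculation", "cost_centre": "CC100"}, conn))
print(handle({"kind": "narrative", "topic": "variance commentary"}, conn))
```

Tagging each answer with `produced_by` gives reviewers a simple way to confirm that every figure in an output originated from a deterministic tool.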

Monday Moves: From Insight to Action

Four actions FP&A leaders can take:

Audit your AI portfolio against the process classification framework. Decompose target processes into discrete tasks. This shows where agents add value and what controls each task needs. Are you applying probabilistic AI to deterministic tasks? Document where category mismatches exist.

Map control points for your highest-priority AI application. Where do humans approve? What triggers escalation? Can you explain the approval logic to your auditors?

Test your data foundation. Can you trace AI inputs to source systems? Do training, validation, and production data remain separate? If not, start systematic lineage documentation for high-risk AI applications and establish clear data ownership.

Enforce calculation routing. Do you require that calculations go to deterministic tools (SQL queries, code execution) rather than allowing probabilistic generation?

Trustworthy AI rests on three architectural foundations:

process design matching technology to task,

control boundaries embedded from the start, and

verified data governance throughout.

FP&A leaders who build these systematically transform AI from a "black box" to a boardroom asset. If Gartner's prediction [2] holds, fewer than 60% of agentic AI projects will survive to 2027, and those that do are likely to share a common trait: better architecture. The distinction becomes clear the moment you audit your first AI workflow.

Share your experiences in the comments: what stopped your pilots from reaching production, or what governance choices enabled success?

Sources

1. Griffin, V., & McNabb, K. (2025). 2025 CFO Agenda: Gen AI takes center stage. The Hackett Group. https://www.thehackettgroup.com/insights/2025-cfo-agenda-2501/

2. Gartner (June 2025). Gartner Predicts Over 40% of Agentic AI Projects Will Be Canceled by End of 2027. https://www.gartner.com/en/newsroom/press-releases/2025-06-25-gartner-predicts-over-40-percent-of-agentic-ai-projects-will-be-canceled-by-end-of-2027

3. Stange, S., Roos, A., Rodt, M., Demytennaere, M., & Arnoldsen, A. (June 2025). How to Get ROI from AI in the Finance Function. Boston Consulting Group. https://web-assets.bcg.com/pdf-src/prod-live/how-finance-leaders-can-get-roi-from-ai.pdf

4. McKinsey (May 2024). How Lufthansa is using data to reduce costs and improve spend and carbon transparency. https://www.mckinsey.com/capabilities/operations/how-we-help-clients/how-lufthansa-is-using-data-to-reduce-costs-and-improve-spend-and-carbon-transparency

5. Microsoft (September 2025). EY redesigns its global finance process with Microsoft Power Platform. https://www.microsoft.com/en/customers/story/25263-ey-global-services-limited-power-apps?utm_source=chatgpt.com

6. Lovin, M.F. (2025). LLMs to support a domain specific knowledge assistant (master’s thesis, Imperial College London). arXiv. https://arxiv.org/abs/2502.04095

7. Deloitte (December 2025). The measured leap: Appraising AI agent impact with agent operations. https://www.deloitte.com/content/dam/assets-zone3/us/en/docs/services/consulting/2025/ai-agent-observability-human-in-the-loop.pdf

8. KPMG (2025). Data governance in the age of AI. https://kpmg.com/kpmg-us/content/dam/kpmg/pdf/2025/data-governance-age-ai.pdf

9. Writer team (February 2025). Expecting the unexpected: A new benchmark for LLM resilience in finance. FailSafeQA. Writer Engineering. https://writer.com/engineering/failsafeqa-benchmark/

10. Pisanschi, B. RAG for Finance: Automating Document Analysis with LLMs. CFA Institute Research & Policy Center. https://rpc.cfainstitute.org/research/the-automation-ahead-content-series/retrieval-augmented-generation?utm_source=chatgpt.com
