In this article, the author explains how AI is transforming FP&A from a reporting function into...

Introduction

Finance transformation is often described as a shift from transaction processing and stewardship towards insight and strategic partnering. One useful shorthand is:

- Bookkeeper - transactional / scorekeeping

- Controller - control and reporting

- Business Analyst - decision support

- Strategic Partner - enterprise value

Finance earns each step up this ladder by building trust through demonstrated value and governance.

This article’s thesis is that AI is on a similar trajectory. It will move beyond automation and reporting towards decision contribution, but it will only earn “partnership” status through the same mechanisms that promoted the Finance function: demonstrated value plus explicit governance.

The practical CFO question is therefore not “Can AI do more?” but “How do we structure a partnership where AI can propose and test, while humans retain authority, controls, and an audit trail for material decisions?”

In this article, ‘partner’ means: a system that can propose actions, test them against agreed criteria, and present evidence, while humans retain authority and accountability.

The AI Maturity Model

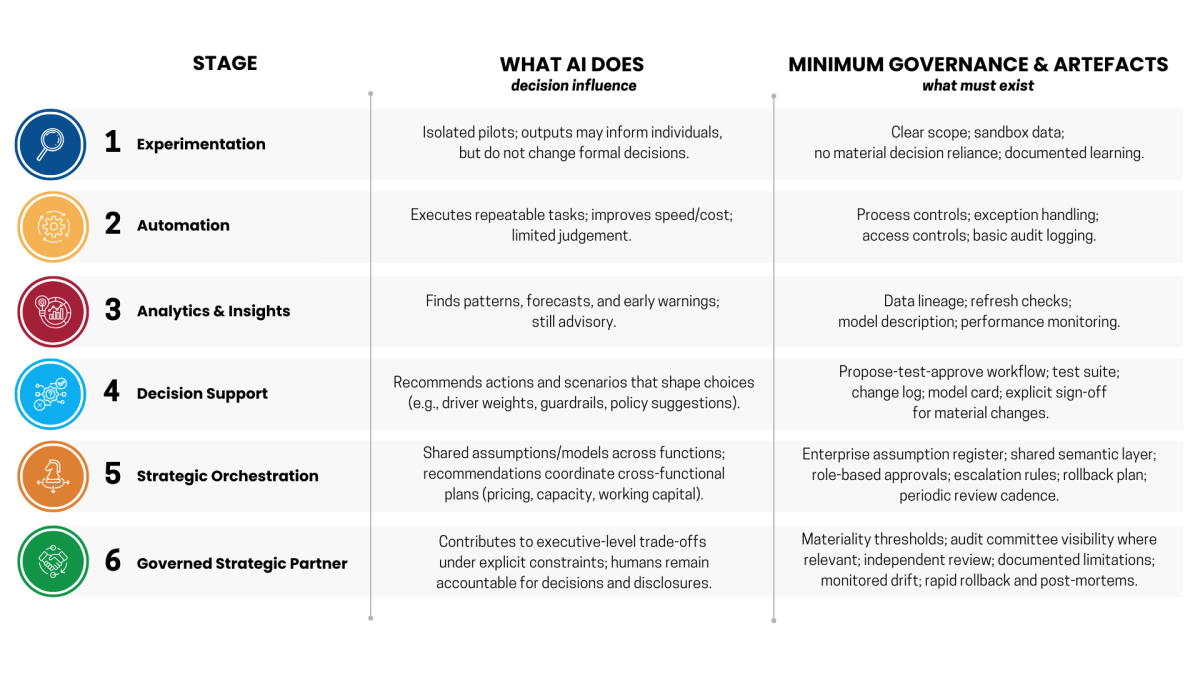

A practical maturity curve for AI in FP&A can be expressed as six stages. The key point is not the label, but the change in decision rights and governance artefacts as you progress.

Figure 1: The AI Maturity Model

Observation: many organisations sit between stages 2 and 3. The real shift is from stage 3 to stage 4, and it comes not from ‘better models’ but from a better operating model, in which AI is allowed to propose and test while humans keep accountable sign-off.

What This Means for FP&A Leaders

A CFO or Head of FP&A can use the maturity model as a practical governance checklist. Key takeaways:

Diagnose your current stage honestly. Many teams are effectively at stages 2 to 3, even if the rhetoric is “AI-driven decisions”. Ask: “Do AI outputs ever change a formal decision without explicit sign-off and an audit trail?”

Treat the transition from stage 3 to stage 4 as an operating model change. Stage 4 is where AI begins to shape choices. Progress depends less on clever algorithms and more on decision rights, approval gates, and repeatable propose-test-approve workflows.

Write down decision rights and materiality thresholds. Specify what AI may propose, who approves, what counts as material, and what tests must be passed before a change goes live (and when escalation is required).

Insist on minimum governance artefacts before scaling. At a minimum for material decisions: data lineage and refresh checks, documented tests and acceptance criteria, versioning and rollback, a short model card, monitoring for drift, and a change log.

Governance accelerates adoption when it is proportional. Governance is not bureaucracy; it is the mechanism that makes more experimentation safe. Calibrate rigour to materiality: stronger controls where consequences are larger.

Build collaboration capability, not just tooling. Leaders need the skill to brief AI, challenge its suggestions, and override when judgment matters. Early projects should be treated as training in governed collaboration, not just output generation.
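To make the takeaways above more concrete, here is a minimal Python sketch of how decision rights, a materiality threshold, and the minimum governance artefacts might be written down as an approval gate. It is an illustration under stated assumptions, not a prescribed implementation: the names `Proposal`, `Route`, `route_proposal`, and `REQUIRED_ARTEFACTS`, the three routes, and the threshold value are all hypothetical. Only the artefact list is taken from the text. Note that the gate only routes a proposal to the right human sign-off; it never approves anything itself.

```python
from dataclasses import dataclass, field
from enum import Enum


class Route(Enum):
    """Hypothetical outcomes of the gate; a human still makes the decision."""
    SEND_BACK = "send back to proposer"
    STANDARD_APPROVAL = "standard human approval"
    ESCALATE = "escalate to senior sign-off"


# Minimum artefacts for material decisions, as listed in the text.
# The identifiers themselves are illustrative.
REQUIRED_ARTEFACTS = frozenset({
    "data_lineage", "acceptance_tests", "versioning_rollback",
    "model_card", "drift_monitoring", "change_log",
})


@dataclass
class Proposal:
    description: str
    impact: float                 # estimated financial impact (assumed unit: currency)
    tests_passed: bool            # did the agreed acceptance tests pass?
    artefacts: set[str] = field(default_factory=set)


def route_proposal(p: Proposal, materiality_threshold: float) -> tuple[Route, str]:
    """Return the required sign-off route plus a reason for the audit trail."""
    if not p.tests_passed:
        return Route.SEND_BACK, "acceptance tests not passed"
    if abs(p.impact) < materiality_threshold:
        return Route.STANDARD_APPROVAL, "below materiality threshold"
    # Material change: every minimum artefact must be in place first.
    missing = sorted(REQUIRED_ARTEFACTS - p.artefacts)
    if missing:
        return Route.SEND_BACK, "missing artefacts: " + ", ".join(missing)
    return Route.ESCALATE, "material change with complete artefacts"


# Example: a material proposal that lacks a model card is sent back.
proposal = Proposal(
    description="Change a revenue forecast driver",
    impact=250_000,
    tests_passed=True,
    artefacts=set(REQUIRED_ARTEFACTS) - {"model_card"},
)
route, reason = route_proposal(proposal, materiality_threshold=100_000)
print(f"{route.name}: {reason}")
```

Returning a reason alongside the route is a deliberate choice in this sketch: each routing decision can then be written to a change log, which supports the audit trail the article asks for. Rigour scales with materiality because the artefact check applies only above the threshold.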

Closing

An AI partnership is achievable when roles, tests, and approval gates are deliberately designed: AI proposes and tests; humans decide and remain accountable.

Part 2 applies this governance-first frame to a real decision system, showing how propose-test-approve cycles, operating environment sensing, and controlled model evolution work in practice.
